Detecting marine heatwave based on observation

Contents

Detecting marine heatwave based on observation#

What is marine heatwaves#

The marine heatwaves are anomalous warm water over the ocean. To detect warm ocean water, sea surface temperature (SST) is used to define if there is any marine heatwave event.

Goals in the notes#

Extract the data from the PSL OPeNDAP server

Calculate the SST climatology

Calculate the SST anomaly

Determine the SST threshold based on the anomaly

Identify the marine heatwaves based on threshold

Note

The following example is based on the paper Jacox et al., 2022.

Extract the data from the PSL OPeNDAP server#

In this notebook, we demonstrate how to use the NOAA OISST v2 High-resolution dataset to show the observed marine heatwaves. The dataset is currently hosted on NOAA Physical Sciences Laboratory.

Python packages#

The module imported to the python kernel is shown below

import warnings

import datetime

import xarray as xr

import numpy as np

warnings.simplefilter("ignore")

Tip

This line of code is not affecting the execution but just removing some of the warning output that might clutter your notebook. However, do pay attention to some of the warnings since they will indicate some deprecation of function or arg/kwarg in future update.

Lazy loading the dataset through OPeNDAP#

With the power of Xarray and Dask, we can load the data lazily (only loading the metadata and coordinates information) and peek at the data’s dimension and availability on our local machine. The actual data (SST values at each grid point in this case) will only be downloaded from the PSL server when further data manipulation (subsetting and aggregation like calculating mean) is needed.

opendap_mon_url = "https://psl.noaa.gov/thredds/dodsC/Datasets/noaa.oisst.v2.highres/sst.mon.mean.nc"

ds_mon = xr.open_dataset(opendap_mon_url, engine='pydap', chunks={'time':12,'lon':-1,'lat':-1})

Important

The chunks keyword argument in the open_dataset is the key to your processing speed and how one avoids the download limit of the OPeNDAP server.

The engine keyword argument is set to 'pydap' to utilize the pydap backend to grab the data on an OPeNDAP server.

What is chunk?#

Dask has great documentation for the concept ‘chunks’. The basic idea is that a single netCDF file can be separated into multiple chunks (e.g. 20(lon)x20(lat) into four chunks of 10(lon)x10(lat)).

By reading each chunk at a time as needed, we do not have to load the entire dataset into the memory.

This chunk-by-chunk reading is performed by Dask in the background.

The only thing user needs to do to activate this function is to read the netCDF file using the xr.open_dataset() method with the keyword argument chunks={'time':12,'lon':-1,'lat':-1} provided.

The chunk reading approach provide the opportunity to reduce the memory usage on the local machine during the calculation, the possibility of parallelizing the processes, and side-stepping the data download limit set by the OPeNDAP server (PSL server has a 500MB limit).

The dataset is loaded lazily (only metadata and coordinates) shown below.

ds_mon

<xarray.Dataset>

Dimensions: (time: 497, lat: 720, lon: 1440)

Coordinates:

* time (time) datetime64[ns] 1981-09-01 1981-10-01 ... 2023-01-01

* lat (lat) float32 -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

* lon (lon) float32 0.125 0.375 0.625 0.875 ... 359.1 359.4 359.6 359.9

Data variables:

sst (time, lat, lon) float32 dask.array<chunksize=(12, 720, 1440), meta=np.ndarray>

Attributes:

Conventions: CF-1.5

title: NOAA/NCEI 1/4 Degree Daily Optimum Inter...

institution: NOAA/National Centers for Environmental ...

source: NOAA/NCEI https://www.ncei.noaa.gov/data...

References: https://www.psl.noaa.gov/data/gridded/da...

dataset_title: NOAA Daily Optimum Interpolation Sea Sur...

version: Version 2.1

comment: Reynolds, et al.(2007) Daily High-Resolu...

_NCProperties: version=2,netcdf=4.7.0,hdf5=1.10.5,

DODS_EXTRA.Unlimited_Dimension: timeIn our example, we set the size of each chunk to be 12(time)x1440(lon)x720(lat) which is equal to 47.46 MB of data while the entire dataset is 1.39 GB. This allows us to get data in 47.46 MB chunk per download request.

Calculate the SST climatology#

First, we need to define the period that we are going to use to calculate the climatology. Here, we picked the 2010-2020 period to calculate the climatology.

Important

For a more accurate and scientifically valid estimate of marine heatwaves, one should usually consider a climatology period of at least 30 years. Here we set the climatology period from 2010 to 2020 (10 years) to speed up the processing time and for demonstration only. The shorter period (less memory consumption) also makes the interactive notebook launch on this page available for the user to manipulate and play with the dataset.

climo_start_yr = 2010 # determine the climatology/linear trend start year

climo_end_yr = 2020 # determine the climatology/linear trend end year

ds_mon_crop = ds_mon.where((ds_mon['time.year']>=climo_start_yr)&

(ds_mon['time.year']<=climo_end_yr),drop=True)

ds_mon_crop

<xarray.Dataset>

Dimensions: (time: 132, lat: 720, lon: 1440)

Coordinates:

* time (time) datetime64[ns] 2010-01-01 2010-02-01 ... 2020-12-01

* lat (lat) float32 -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

* lon (lon) float32 0.125 0.375 0.625 0.875 ... 359.1 359.4 359.6 359.9

Data variables:

sst (time, lat, lon) float32 dask.array<chunksize=(8, 720, 1440), meta=np.ndarray>

Attributes:

Conventions: CF-1.5

title: NOAA/NCEI 1/4 Degree Daily Optimum Inter...

institution: NOAA/National Centers for Environmental ...

source: NOAA/NCEI https://www.ncei.noaa.gov/data...

References: https://www.psl.noaa.gov/data/gridded/da...

dataset_title: NOAA Daily Optimum Interpolation Sea Sur...

version: Version 2.1

comment: Reynolds, et al.(2007) Daily High-Resolu...

_NCProperties: version=2,netcdf=4.7.0,hdf5=1.10.5,

DODS_EXTRA.Unlimited_Dimension: timeTo calculate the SST monthly climatology, we can utilize the groupby method from Xarray.

ds_mon_climo = ds_mon_crop.groupby('time.month').mean()

Calculate the SST anomaly#

After the climatology is determined, we subtract the climatology from the original data to get the anomaly.

ds_mon_anom = (ds_mon_crop.groupby('time.month')-ds_mon_climo).compute()

ds_mon_anom.sst

<xarray.DataArray 'sst' (time: 132, lat: 720, lon: 1440)>

array([[[ nan, nan, nan, ...,

nan, nan, nan],

[ nan, nan, nan, ...,

nan, nan, nan],

[ nan, nan, nan, ...,

nan, nan, nan],

...,

[-3.1319499e-02, -2.7741909e-02, -2.6187658e-02, ...,

-2.6598334e-02, -2.7155399e-02, -3.1085014e-02],

[-2.4809241e-02, -1.8386960e-02, -1.6305089e-02, ...,

-1.4369369e-02, -1.4985323e-02, -2.3841619e-02],

[-5.4544210e-03, -5.9822798e-03, -6.4514875e-03, ...,

-8.2111359e-03, -7.2433949e-03, -6.1584711e-03]],

[[ nan, nan, nan, ...,

nan, nan, nan],

[ nan, nan, nan, ...,

nan, nan, nan],

[ nan, nan, nan, ...,

nan, nan, nan],

...

[ 5.2757502e-02, 4.2848587e-02, 3.7666678e-02, ...,

2.0515203e-02, 2.8000116e-02, 4.2333364e-02],

[ 3.1303048e-02, 9.6969604e-03, -1.5139580e-04, ...,

-6.9090128e-03, -9.0956688e-05, 2.0666718e-02],

[-3.4575701e-02, -3.4575701e-02, -2.1000028e-02, ...,

-2.6000023e-02, -3.4575701e-02, -3.4575701e-02]],

[[ nan, nan, nan, ...,

nan, nan, nan],

[ nan, nan, nan, ...,

nan, nan, nan],

[ nan, nan, nan, ...,

nan, nan, nan],

...,

[ 1.6598463e-02, 8.3285570e-03, 4.1640997e-03, ...,

7.9178810e-04, 3.4604073e-03, 1.1348963e-02],

[ 6.4809322e-03, -8.3577633e-03, -1.6686082e-02, ...,

-1.5073180e-02, -1.3401747e-02, 1.7619133e-04],

[-3.0703783e-02, -3.0703783e-02, -3.0703783e-02, ...,

-3.0703783e-02, -3.0703783e-02, -3.0703783e-02]]], dtype=float32)

Coordinates:

* time (time) datetime64[ns] 2010-01-01 2010-02-01 ... 2020-12-01

* lat (lat) float32 -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

* lon (lon) float32 0.125 0.375 0.625 0.875 ... 359.1 359.4 359.6 359.9

month (time) int64 1 2 3 4 5 6 7 8 9 10 11 ... 2 3 4 5 6 7 8 9 10 11 12Attention

Notice the .compute() method in the code above.

The data of SST is only loaded chunk-by-chunk, cropped to the desired period, averaged in the group of months, and finally subtracted the climatology from the original data when we execute the .compute() line.

All these tasks are now executed in the background with a distributed server assigning tasks to different CPUs.

Determine the SST threshold based on the anomaly#

If the monthly threshold is based on the same month (e.g. January threshold is determined by all January SST anomalies), the monthly threshold can be calculated using a oneliner.

ds_mon_anom.sst.groupby('time.month').quantile(0.95,dim='time')

<xarray.DataArray 'sst' (month: 12, lat: 720, lon: 1440)>

array([[[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

...,

[0.02835792, 0.03064525, 0.03655434, ..., 0.03759527,

0.03155434, 0.0287537 ],

[0.02761012, 0.0340324 , 0.03611428, ..., 0.03805 ,

0.03743404, 0.02857774],

[0.04567462, 0.04514676, 0.04467756, ..., 0.04695022,

0.04388565, 0.04497057]],

[[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

...

[0.0959242 , 0.07951522, 0.07316673, ..., 0.05734849,

0.06800008, 0.0875001 ],

[0.06330305, 0.03903037, 0.03834862, ..., 0.03659099,

0.04340905, 0.05600005],

[0.05225766, 0.05225766, 0.04883337, ..., 0.05050004,

0.05225766, 0.05225766]],

[[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

...,

[0.07546937, 0.05752206, 0.05255127, ..., 0.05224347,

0.05668616, 0.07215536],

[0.04615831, 0.03131968, 0.03976554, ..., 0.03847522,

0.03256601, 0.0459826 ],

[0.05300587, 0.05300587, 0.05300587, ..., 0.05300587,

0.05300587, 0.05300587]]], dtype=float32)

Coordinates:

* lat (lat) float32 -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

* lon (lon) float32 0.125 0.375 0.625 0.875 ... 359.1 359.4 359.6 359.9

quantile float64 0.95

* month (month) int64 1 2 3 4 5 6 7 8 9 10 11 12However, based on the research, the threshold is determined based on a three month window with the center month being the monthly threhold one need to determined (e.g. January threshold is determined by all December, January, Feburary SST anomalies). Therefore, the function below is written to perform the three months window percentile operation.

########## Functions #########

# Function to calculate the 3 month rolling Quantile

def mj_3mon_quantile(da_data, mhw_threshold=90.):

da_data_quantile = xr.DataArray(coords={'lon':da_data.lon,

'lat':da_data.lat,

'month':np.arange(1,13)},

dims = ['month','lat','lon'])

for i in range(1,13):

if i == 1:

mon_range = [12,1,2]

elif i == 12 :

mon_range = [11,12,1]

else:

mon_range = [i-1,i,i+1]

da_data_quantile[i-1,:,:] = (da_data

.where((da_data['time.month'] == mon_range[0])|

(da_data['time.month'] == mon_range[1])|

(da_data['time.month'] == mon_range[2]),drop=True)

.quantile(mhw_threshold*0.01, dim = 'time', skipna = True))

return da_data_quantile

%time da_mon_quantile = mj_3mon_quantile(ds_mon_anom.sst, mhw_threshold=90)

CPU times: user 10min 28s, sys: 1.51 s, total: 10min 29s

Wall time: 10min 33s

Tip

The %time command is jupyter cell magic to time the one-liner cell operation.

It provides a great way to find the bottleneck of your data processing steps.

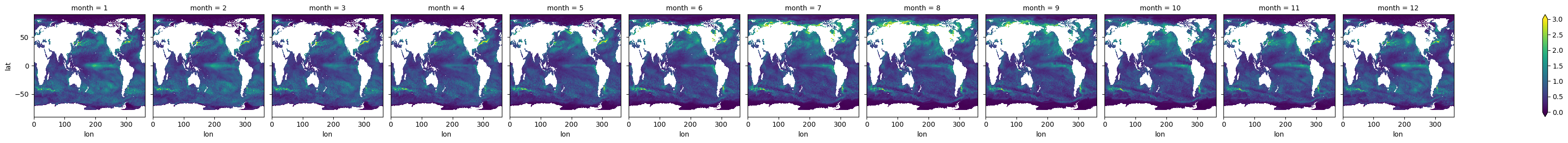

The determined threshold value of each grid of each month is shown below

da_mon_quantile.plot(col='month',vmin=0,vmax=3)

<xarray.plot.facetgrid.FacetGrid at 0x7f3a04adf970>

Identify the marine heatwaves based on threshold#

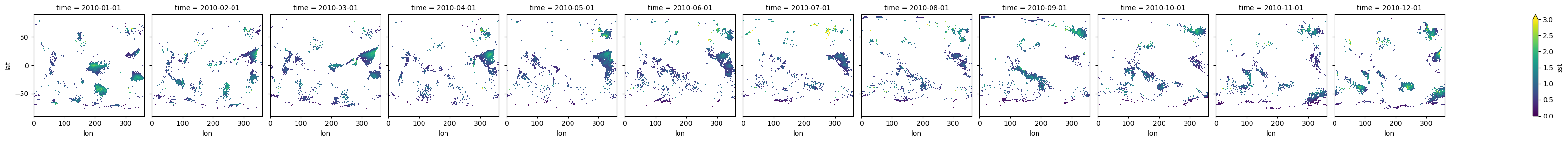

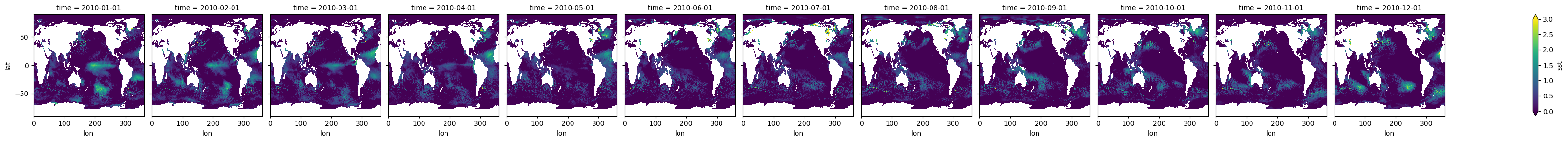

The figure below shows the original SST anomaly value for the first 12 month.

ds_mon_anom.sst.isel(time=slice(0,12)).plot(col='time',vmin=0,vmax=3)

<xarray.plot.facetgrid.FacetGrid at 0x7f39b5344cd0>

To identify the marine heatwaves based on the monthly threshold, we again use a one-liner code to find the monthly marine heatwaves with the grid that has SST anomaly below the threshold to be masked as Not-a-Number.

da_mhw = ds_mon_anom.sst.where(ds_mon_anom.sst.groupby('time.month')>da_mon_quantile)

The figure below shows the SST anomalous values that are above the monthly thresholds for the first 12 months.

da_mhw.isel(time=slice(0,12)).plot(col='time',vmin=0,vmax=3)

<xarray.plot.facetgrid.FacetGrid at 0x7f3994627940>